Audit teams face more systems, more data, and tighter windows. Many steps are still manual. AI and automation can improve coverage and speed while keeping evidence clear. The key is to use simple steps, strong controls, and steady review.

Audits rely on repeatable rules. Automation can run those rules at scale and flag the odd items. This helps teams spend time on judgment, not data prep. It also creates a traceable trail of what was checked and why.

Leaders care about quality and cost. A good plan improves quality and lowers cost at the same time. The first goal is not a new toolbox. It is a better way to plan, test, and document.

Start with risk. Use past findings, process maps, and data shape to rate risk by area. Add simple analytics such as trend lines and basic ratios. The risk view guides scope, timing, and materiality.
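One way to turn those inputs into a ranking is a small weighted score per area. The sketch below is illustrative only: the field names, the weights, and the `risk_score` and `rank_areas` functions are assumptions made for this example, not a standard method, and the weights would need tuning to your own audit universe.

```python
from dataclasses import dataclass


@dataclass
class AreaRisk:
    name: str
    past_findings: int    # findings raised in prior audits
    process_changes: int  # notable process or system changes this year
    volume_trend: float   # year-over-year change in volume, e.g. 0.25 = +25%


def risk_score(area, weights=(3.0, 2.0, 10.0)):
    """Weighted sum of simple indicators. The weights are placeholders."""
    w_findings, w_changes, w_trend = weights
    return (
        w_findings * area.past_findings
        + w_changes * area.process_changes
        + w_trend * abs(area.volume_trend)
    )


def rank_areas(areas):
    """Return areas ordered from highest to lowest score."""
    return sorted(areas, key=risk_score, reverse=True)
```

A plain weighted sum is easy to explain in a planning memo, which matters more here than statistical polish.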

Write the plan in steps that a new person can follow. Name owners and due dates. List systems and data sources. Call out limits and how you will handle them. Clear plans reduce review time later.

Good inputs drive strong tests. Build a clean data set for each process. Keep fields, types, and sources in a short data map. Add checks for required fields, valid ranges, and code lists.
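The three checks named above can be sketched in a few lines. This is a minimal example that assumes rows are already loaded as dictionaries with typed values; the `validate_rows` name, the field names, and the issue labels are hypothetical.

```python
def validate_rows(rows, required, ranges, code_lists):
    """Return a list of (row_index, field, issue) tuples.

    required:   field names that must be present and non-empty
    ranges:     {field: (low, high)} inclusive numeric bounds
    code_lists: {field: set of allowed codes}
    """
    issues = []
    for index, row in enumerate(rows):
        for field in required:
            if row.get(field) in (None, ""):
                issues.append((index, field, "missing"))
        for field, (low, high) in ranges.items():
            value = row.get(field)
            # Empty values are reported by the required-field check only.
            if value not in (None, "") and not (low <= value <= high):
                issues.append((index, field, "out_of_range"))
        for field, allowed in code_lists.items():
            value = row.get(field)
            if value not in (None, "") and value not in allowed:
                issues.append((index, field, "invalid_code"))
    return issues
```

Keeping the rules as plain arguments means the same data map that documents the fields can also drive the checks.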

Set a file plan for workpapers. Use a simple folder and naming scheme that matches your steps. Include a readme in each folder with a short note on what it holds. People find files faster and reviews move with less back and forth.

Pick controls with clear rules. Good examples include user access reviews, maker-checker checks, vendor master changes, and three-way match. Use scripts or rules to pull samples, compare fields, and show fails.
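As an illustration, a three-way match compares a purchase order, a goods receipt, and an invoice. The sketch below assumes one line per document, hypothetical `qty` and `unit_price` fields, and a price tolerance of one cent; real matching rules vary by policy.

```python
from decimal import Decimal


def three_way_match(po, receipt, invoice, price_tolerance=Decimal("0.01")):
    """Return a list of failure reasons; an empty list means the match passed."""
    fails = []
    if invoice["qty"] > receipt["qty"]:
        fails.append("invoiced quantity exceeds received quantity")
    if receipt["qty"] > po["qty"]:
        fails.append("received quantity exceeds ordered quantity")
    if abs(invoice["unit_price"] - po["unit_price"]) > price_tolerance:
        fails.append("invoice unit price differs from purchase order")
    return fails
```

Returning the reasons, rather than a bare pass or fail, gives the reviewer something concrete to follow up.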

Keep a log for each test with date, input source, logic used, and result. Route fails to the team with a clear status and owner. This speeds review and builds a clean trail for external checks.
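A test log like this can be as simple as one JSON record per line. The sketch below is one possible shape, not a required format: the `log_test` function, the field names, and the rule that any non-pass result opens an item are assumptions for illustration.

```python
import io
import json
from datetime import datetime, timezone


def log_test(stream, test_id, input_source, logic, result, owner=None):
    """Append one JSON line describing a test run and return the record."""
    record = {
        "test_id": test_id,
        "run_at": datetime.now(timezone.utc).isoformat(),
        "input_source": input_source,
        "logic": logic,
        "result": result,
        # Assumption: anything other than a pass stays open until resolved.
        "status": "closed" if result == "pass" else "open",
        "owner": owner,
    }
    stream.write(json.dumps(record) + "\n")
    return record


# Example usage with an in-memory buffer; a real run would open a log file.
buffer = io.StringIO()
entry = log_test(
    buffer,
    test_id="AP-003",
    input_source="ap_invoices_2024Q1.csv",
    logic="three-way match, price tolerance 0.01",
    result="fail",
    owner="j.doe",
)
```

One record per line keeps the log easy to append to, easy to diff, and easy to load into a spreadsheet later.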

Link data from ledgers, subledgers, and source files. Run joins and checks for missing keys, duplicates, and round-sum patterns. Use simple models to rank risk, such as large journal entries near period end or entries at odd hours.
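These checks fit in a handful of small functions. The sketch below uses plain Python structures and hypothetical field names (`entry_id`, `amount`, `posted_at`). The thresholds (a 50,000 "large" amount, three days before period end, posting before 06:00 or from 22:00) are placeholder assumptions.

```python
from collections import Counter
from datetime import date, datetime
from decimal import Decimal


def missing_keys(subledger_rows, ledger_rows, key="entry_id"):
    """Keys present in the subledger but absent from the ledger."""
    ledger_keys = {row[key] for row in ledger_rows}
    return [row[key] for row in subledger_rows if row[key] not in ledger_keys]


def duplicate_keys(rows, key="entry_id"):
    """Keys that appear more than once."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def is_round_sum(amount, unit=Decimal("1000")):
    """True for non-zero amounts that are exact multiples of `unit`."""
    return amount != 0 and amount % unit == 0


def entry_score(entry, period_end, large_amount=Decimal("50000")):
    """Simple additive score: higher means look at this entry sooner."""
    posted = entry["posted_at"]
    days_to_close = (period_end - posted.date()).days
    score = 0
    if abs(entry["amount"]) >= large_amount and 0 <= days_to_close <= 3:
        score += 2  # large entry near period end
    if posted.hour < 6 or posted.hour >= 22:
        score += 1  # posted at an odd hour
    if is_round_sum(entry["amount"]):
        score += 1  # round-sum pattern
    return score
```

An additive score like this is crude on purpose: each point maps to a rule a reviewer can read and challenge.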

Treat a score as a pointer, not proof. A human tests the item, adds notes, and sets a final status. This mix of ranking plus judgment keeps quality high and bias low.

Use random or stratified sampling for a fair base. Add a risk-ranked layer to focus on items that look odd. Define thresholds and review rules. Keep the math simple and explainable to non-technical readers.

Record how you chose the sample. Save the seed and the rules. This helps reviewers repeat the steps and removes debate about method.
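A seeded, stratified draw with a risk-ranked top-up might look like the sketch below. The function names, the per-stratum sample size, and the way items are keyed are assumptions for illustration; the point is that the same seed and rules reproduce the same sample.

```python
import random
from collections import defaultdict


def stratified_sample(items, stratum_of, per_stratum, seed):
    """Draw up to `per_stratum` items from each stratum, reproducibly."""
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    strata = defaultdict(list)
    for item in items:
        strata[stratum_of(item)].append(item)
    sample = []
    for name in sorted(strata):  # fixed order so results do not depend on input order of strata
        members = strata[name]
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


def add_risk_layer(sample, items, score_of, id_of, top_n):
    """Append the `top_n` highest-scoring items not already sampled."""
    chosen = {id_of(item) for item in sample}
    ranked = sorted(items, key=score_of, reverse=True)
    extras = [item for item in ranked if id_of(item) not in chosen][:top_n]
    return sample + extras
```

Saving the seed, the stratum rule, and `per_stratum` in the workpaper is enough for a reviewer to rerun the draw and get the same items, provided the population file is unchanged.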

For high-risk areas, run a small set of daily or weekly checks. Track trends and alert on changes that cross a limit. Start with two or three checks per area to keep noise low.
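A limit check can be as plain as comparing the latest value with a recent average. In this sketch the `crosses_limit` name and the 25 percent default are assumptions; pick a limit that fits the metric.

```python
def crosses_limit(history, latest, max_relative_change=0.25):
    """True when `latest` moves more than the allowed share from the average of `history`."""
    if not history:
        raise ValueError("history must not be empty")
    baseline = sum(history) / len(history)
    if baseline == 0:
        # No meaningful ratio; treat any non-zero value as a change.
        return latest != 0
    return abs(latest - baseline) / abs(baseline) > max_relative_change
```

For example, with a three-week history of 100, 110, and 90 manual journal entries, a new count of 140 would raise an alert and a count of 110 would not.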

Use the results to plan fieldwork and follow-ups. Share simple charts with process owners. When they see trends early, fixes arrive earlier, and audit work is lighter.

Every test should leave a clear trail. Save inputs, logic, outputs, and a short human note that explains the call. Record who did the work and when. Keep version notes for scripts and rules.
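One way to tie a conclusion to the exact input it was based on is to store a hash of the input file next to the note. The sketch below is a suggestion rather than a required format; the `evidence_record` function and its field names are hypothetical.

```python
import hashlib
from datetime import date


def evidence_record(input_bytes, script_version, outcome, note,
                    performed_by, performed_on):
    """Bundle what was tested, with which logic, by whom, and the human call."""
    return {
        # The SHA-256 digest changes if the input file changes by even one byte.
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "script_version": script_version,
        "outcome": outcome,
        "note": note,
        "performed_by": performed_by,
        "performed_on": performed_on.isoformat(),
    }
```

A reviewer can recompute the digest from the saved input and confirm it matches before relying on the result.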

This makes peer review and external review smoother. It also helps next year’s team move faster because they can follow the path without long calls.

Set roles so tool builders and audit users are not the same people. Review model logic and data for bias. Limit access to sensitive data. Use named service accounts for any system pulls and keep keys in a safe store.

Write a short ethics note for each project. State the goal, the data used, and the guardrails. Keep it in the workpapers so anyone can find it during review.

Map each test to a standard or a policy. Link evidence to that map so a reviewer can follow the chain. Run a weekly quality check on a small sample of files. Track issues, fix root causes, and share lessons.

Keep a playbook that lists common tests, data sets, and checks. Update it after each project. Over time, this raises quality and cuts planning time.

Start with tools you have. Many audit shops already use a script tool, a data grid, and a log store. Check if they meet your needs before you add new tools. When you add, test for access control, logs, and simple links to your stack.

Avoid complex builds early on. Pick tools that let you ship a pilot in weeks. Focus on explainable logic, exportable evidence, and easy review.

Choose one process such as payables or payroll. Limit the scope to one unit. Define success rules before you start. Examples include cycle time per test, number of fails caught, and review time per item.

Hold a short standup each week. Review metrics, blockers, and next steps. Close the pilot with a short report that lists results, gaps, fixes, and a plan to scale or stop. Share the report so leaders can decide fast.

Pick a small set of metrics. Useful ones include percent of population covered, time per test, number of exceptions, and time to resolve a fail. Capture before and after data so the view is fair.
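The arithmetic behind these metrics is simple, and writing it down removes ambiguity about definitions. The helper names and the sample metric names below are assumptions for illustration.

```python
def percent_covered(items_tested, population_size):
    """Share of the population that was tested, as a percentage."""
    if population_size <= 0:
        raise ValueError("population_size must be positive")
    return 100.0 * items_tested / population_size


def before_after(before, after):
    """Change per metric (after minus before) for metrics present in both."""
    return {name: after[name] - before[name] for name in before if name in after}
```

For instance, testing 250 of 1,000 invoices gives 25 percent coverage, and a drop from 4.0 to 1.5 hours per test shows up as a change of -2.5.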

Value is not only hours saved. Better coverage lowers risk. Faster review frees time for judgment and coaching. Clear logs reduce repeat questions from reviewers. Put these gains in a simple one-page view.

After a good pilot, add one more process. Keep the same steps. Reuse data maps, scripts, and checklists. Add a monthly forum where teams share lessons. This keeps growth steady and reduces duplicate work.

Create a short heat map of processes by risk and value. Use it to plan the next two quarters. Small, steady moves beat large, complex jumps.

Pick one process and one unit. Write the plan, data map, and success rules. Run a short pilot. Keep logs and evidence clean. Share results and expand with the same habits. This is how AI improves audit work while keeping trust high.

A practical comparison of hiring a freelancer vs using a dedicated offshore accounting team, focusing on continuity, quality control, security, and scaling.

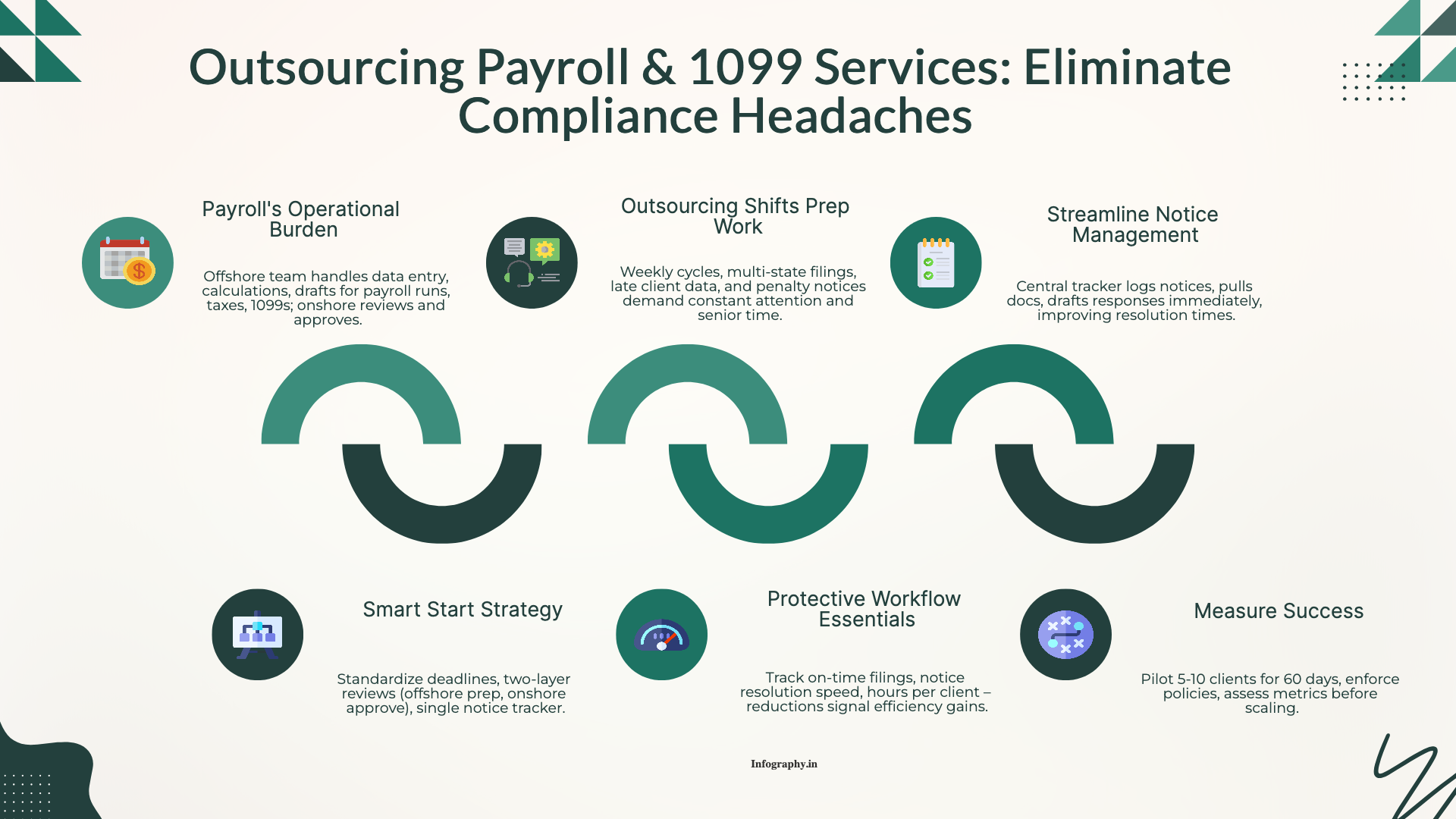

How CPA firms outsource payroll and 1099 work to reduce penalties and admin load, with a clean workflow for approvals, filings, and year-end reporting.

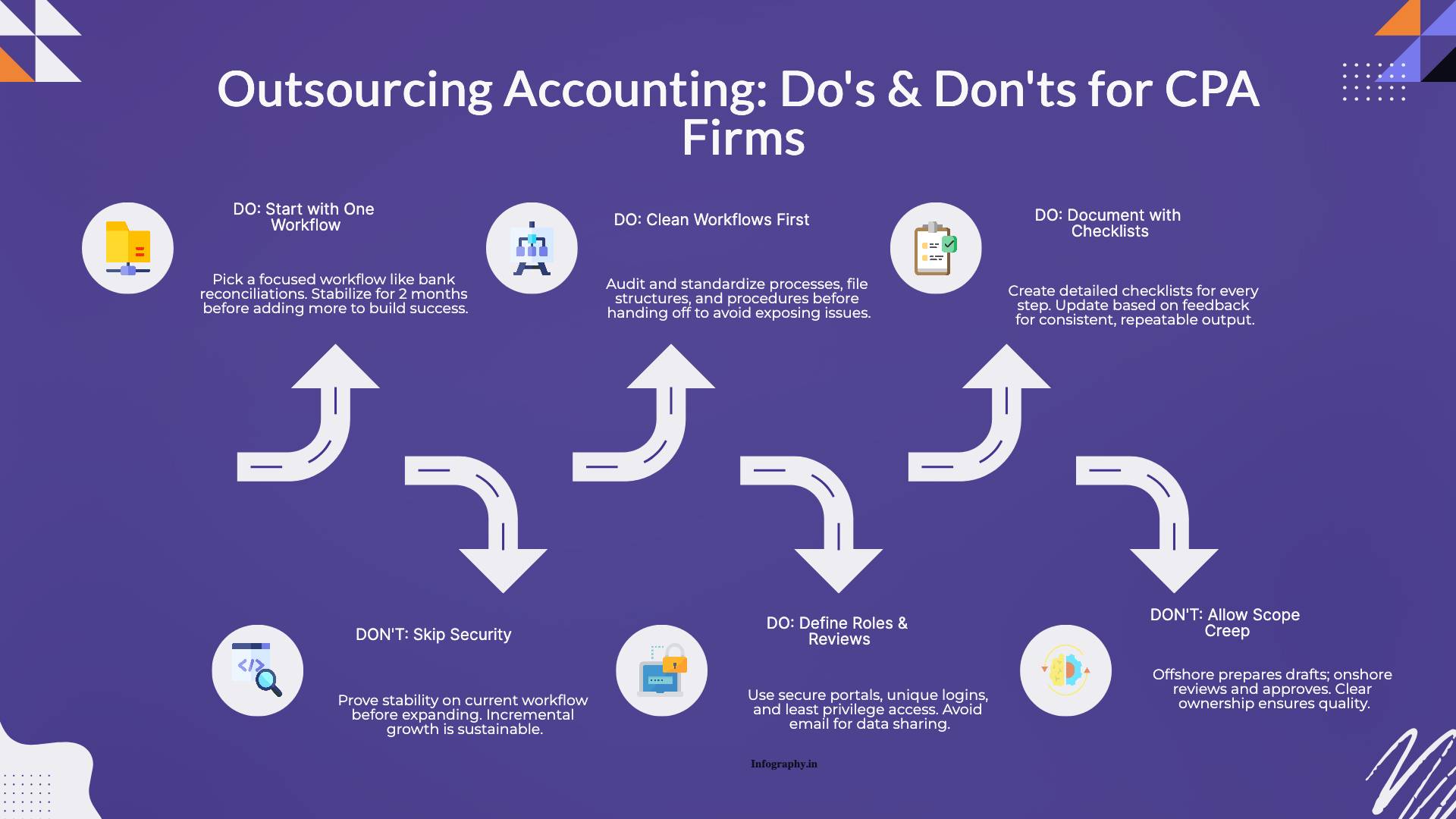

Practical do's and don'ts for CPA firms outsourcing accounting work, based on common failure points and what successful rollouts do differently.